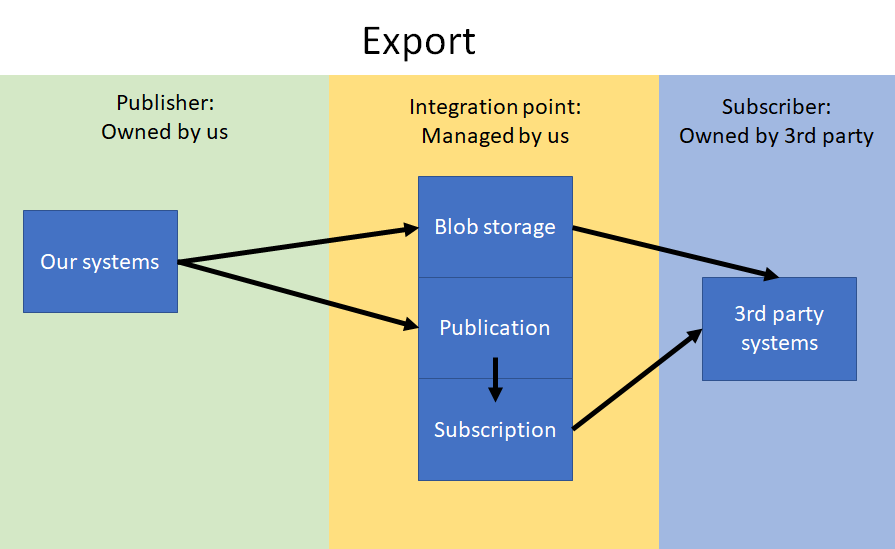

File export

Overview

- We publish data changes as files to a publication that 3rd parties can subscribe to (Pub/Sub).

- Files are published using contracts and batch/file-types as specified by the service that owns the contract.

- Our Pub/Sub-solution is based on Azure ServiceBus for messages and Azure Blob Storage for message payload.

- Once the data is published, it is the 3rd party's responsibility to handle the data using its own subscription.

- Related entities can be exported in any order. The 3rd party must handle situations where a dependent entity is missing during import, e.g. a price arrives before the item.
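One way a client could cope with out-of-order entities is to park a dependent row until its parent arrives. The following is a minimal sketch of that idea only; the class name, the field names (`itemId`, `amount`, `name`) and the in-memory storage are assumptions made for illustration and are not part of any contract.

```python
from collections import defaultdict


class Importer:
    """Applies rows in arrival order and parks prices whose item is missing."""

    def __init__(self):
        self.items = {}                    # item_id -> item row
        self.prices = {}                   # item_id -> latest applied price row
        self.pending = defaultdict(list)   # item_id -> prices waiting for the item

    def import_item(self, item):
        item_id = item["itemId"]           # hypothetical field name
        self.items[item_id] = item
        # The item now exists, so apply any prices that arrived earlier.
        for price in self.pending.pop(item_id, []):
            self.prices[item_id] = price

    def import_price(self, price):
        item_id = price["itemId"]          # hypothetical field name
        if item_id in self.items:
            self.prices[item_id] = price
        else:
            # Dependent entity is missing: keep the price until the item shows up.
            self.pending[item_id].append(price)


importer = Importer()
importer.import_price({"itemId": "A1", "amount": 9.95})   # price arrives first
importer.import_item({"itemId": "A1", "name": "Coffee"})  # item arrives later
print(importer.prices["A1"]["amount"])
```

A real client would probably keep the pending rows in durable storage, so that a restart does not lose them.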

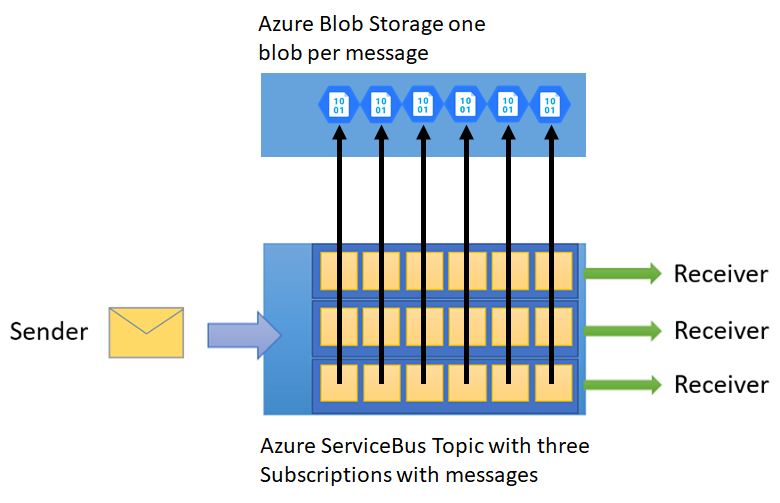

Azure ServiceBus and Blob Storage for PubSub

- We use Azure ServiceBus for publishing messages about available files.

- The message contains the file metadata (see below), including a link to the file itself stored in Azure Blob Storage.

- How files are formatted

- There are a lot of libraries for accessing Azure ServiceBus/BlobStorage, including:

- .NET / .NET Core: ServiceBus and Blob storage

- PHP: See details here

- We have created a .NET Core example implementation, which can be used as a reference implementation for other languages.

- It is recommended to get and save the ServiceBus-messages continuously, but downloading the files and processing them can be done whenever the client wishes.

- Filtering:

- By default no filtering is done: all file-types and all entities/rows in a file are exported.

- For maximum flexibility, the client should do any required filtering, like skipping any unwanted file-types.

- We can add filtering to the subscription to only send a limited set of file-types.

- We can set up a job to filter contents of a file, which will be re-published as another file-type with less content.
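The recommendation to save messages continuously, process files later and filter on the client side could look roughly like the sketch below. It deliberately leaves out the Service Bus SDK: `raw_body` stands in for the body of a received message, the `inbox` list stands in for a durable store, and the set of wanted file-types is a made-up client choice.

```python
import json

WANTED_BLOB_TYPES = {"ItemChanges"}  # hypothetical client choice


def save_message(inbox, raw_body):
    """Store the metadata right away; the file itself is not downloaded here."""
    inbox.append(json.loads(raw_body))


def files_to_process(inbox, wanted=WANTED_BLOB_TYPES):
    """Return the stored metadata entries whose blobType the client wants."""
    return [meta for meta in inbox if meta["blobType"] in wanted]


inbox = []  # stand-in for a durable store such as a database table
save_message(inbox, '{"blobType": "ItemChanges", "uri": "https://example.invalid/a"}')
save_message(inbox, '{"blobType": "ItemImportError", "uri": "https://example.invalid/b"}')

# Later, whenever the client chooses to process files:
selected = files_to_process(inbox)
print([meta["uri"] for meta in selected])
```

Keeping the unwanted entries in the store, rather than dropping them on receipt, leaves room to change the filter later without asking for a re-export.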

File metadata

- Common metadata:

- blobType: Describes the content of the file, example: ItemChanges, ItemImportError, etc.

- correlationId: An ID for tracking the file, example: 8f252380-ef1e-4e0e-8f72-5b7362d8db3b

- uri: A link to the content of the file, example: https://myaccount.blob.core.windows.net/mycontainer/myblob

- contentType: Describes the format of the file (csv, json etc.), example: text/xml, application/json, application/x-jsonlines, application/x-xmllines

- contentEncoding: Optional, describes if the file is compressed, example: gzip

- properties: Optional metadata about the file, a custom JSON-structure, dependent on the blobType.

- Example of metadata:

{

"blobType": "Gateway.ItemChanges",

"correlationId": "7e06807f-674f-4199-9140-a97b897dbaee",

"contentType": "application/x-jsonlines",

"contentEncoding": "gzip",

"uri": "https://myaccount.blob.core.windows.net/batchtoprocess/Gateway.ItemChanges/2022-07-05/7e06807f-674f-4199-9140-a97b897dbaee-ce820821-8215-427a-96eb-7f476bcfc8a4.jsonl.gz",

"properties": {}

}
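As an illustration of how `contentType` and `contentEncoding` can drive the decoding of a downloaded file, here is a minimal sketch. It assumes the file bytes have already been fetched from the `uri`, that the text is UTF-8, and that only the two JSON-based content types need handling; the function name and the sample rows are made up.

```python
import gzip
import json


def decode_file(metadata, raw_bytes):
    """Turn downloaded blob bytes into parsed JSON, guided by the metadata."""
    if metadata.get("contentEncoding") == "gzip":
        raw_bytes = gzip.decompress(raw_bytes)
    text = raw_bytes.decode("utf-8")  # assumption: files are UTF-8
    content_type = metadata["contentType"]
    if content_type == "application/x-jsonlines":
        # One JSON document per non-empty line.
        return [json.loads(line) for line in text.splitlines() if line.strip()]
    if content_type == "application/json":
        return json.loads(text)
    raise ValueError(f"Unsupported contentType: {content_type}")


metadata = json.loads("""{
  "blobType": "Gateway.ItemChanges",
  "contentType": "application/x-jsonlines",
  "contentEncoding": "gzip",
  "properties": {}
}""")
sample = gzip.compress(b'{"itemId": "A1"}\n{"itemId": "A2"}\n')  # made-up file content
rows = decode_file(metadata, sample)
print(len(rows))
```

The XML-based content types listed above would need their own branches; they are omitted here to keep the sketch short.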

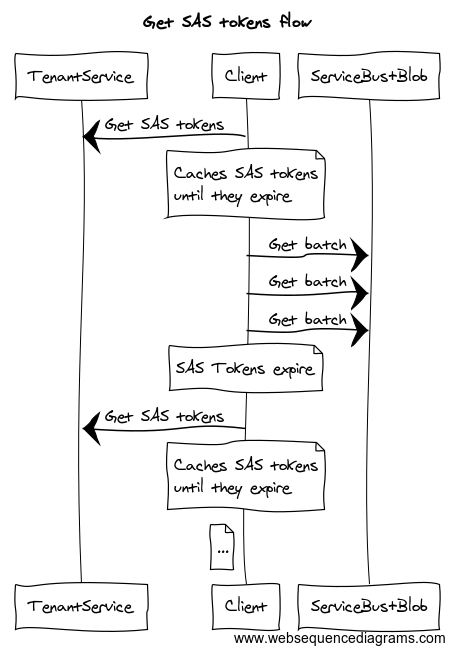

Authentication

- Like all our other Cloud products, the export integration is secured using AAD certificates.

- See details about how to authenticate here

- However, for maximum performance, the client is given direct access to Azure Service Bus and Blob Storage using SAS tokens.

- These tokens are issued by TenantService at /api/external/integration/export/temporaryendpoints and might expire at any time due to token rotation.

- Because of this, the client must automatically obtain new tokens when they expire.

- A complete example of this dance of authenticating and getting tokens can be found here
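Because tokens can be rotated at any time, a client probably wants both a cache and a retry path for rejected tokens. The sketch below shows one possible shape for that. `fetch_tokens` is a hypothetical callable that would call TenantService, `PermissionError` stands in for whatever authentication error the real SDK raises, and the 300 second refresh interval is an arbitrary assumption.

```python
import time


class SasTokenCache:
    """Caches tokens and fetches new ones when they are stale or rejected."""

    def __init__(self, fetch_tokens, max_age_seconds=300.0, clock=time.monotonic):
        self._fetch_tokens = fetch_tokens   # hypothetical: calls TenantService
        self._max_age = max_age_seconds     # assumed interval, not from the source
        self._clock = clock
        self._tokens = None
        self._fetched_at = 0.0

    def get(self):
        stale = (self._tokens is None
                 or self._clock() - self._fetched_at >= self._max_age)
        if stale:
            self._tokens = self._fetch_tokens()
            self._fetched_at = self._clock()
        return self._tokens

    def invalidate(self):
        """Call this when Service Bus or Blob Storage rejects a token."""
        self._tokens = None


def with_token_retry(cache, operation):
    """Run operation(tokens); on an auth failure, refresh once and retry."""
    try:
        return operation(cache.get())
    except PermissionError:  # stand-in for the SDK's authentication error
        cache.invalidate()
        return operation(cache.get())


issued = []


def fake_fetch():
    """Fake token issuer used only to demonstrate the flow."""
    issued.append(f"token-{len(issued) + 1}")
    return issued[-1]


def get_batch(token):
    """Fake batch call that rejects the first token, as if it had been rotated."""
    if token == "token-1":
        raise PermissionError("token rotated")
    return f"batch fetched with {token}"


cache = SasTokenCache(fake_fetch)
result = with_token_retry(cache, get_batch)
print(result)
```

Retrying only once keeps a genuinely revoked client from looping forever against TenantService.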

...

Image source data from https://www.websequencediagrams.com

title Get SAS tokens flow

participant TenantService

participant Client

participant ServiceBus+Blob As Int

Client->TenantService: Get SAS tokens

note over Client: Caches SAS tokens\nuntil they expire

Client->Int: Get batch

Client->Int: Get batch

Client->Int: Get batch

note over Client: SAS Tokens expire

Client->TenantService: Get SAS tokens

note over Client: Caches SAS tokens\nuntil they expire

note left of Client: ...